Interactive Digital Electronics Workshop @ East Carolina University | April 19th, 2018 | Greenville, NC

What is Digital Interactivity?

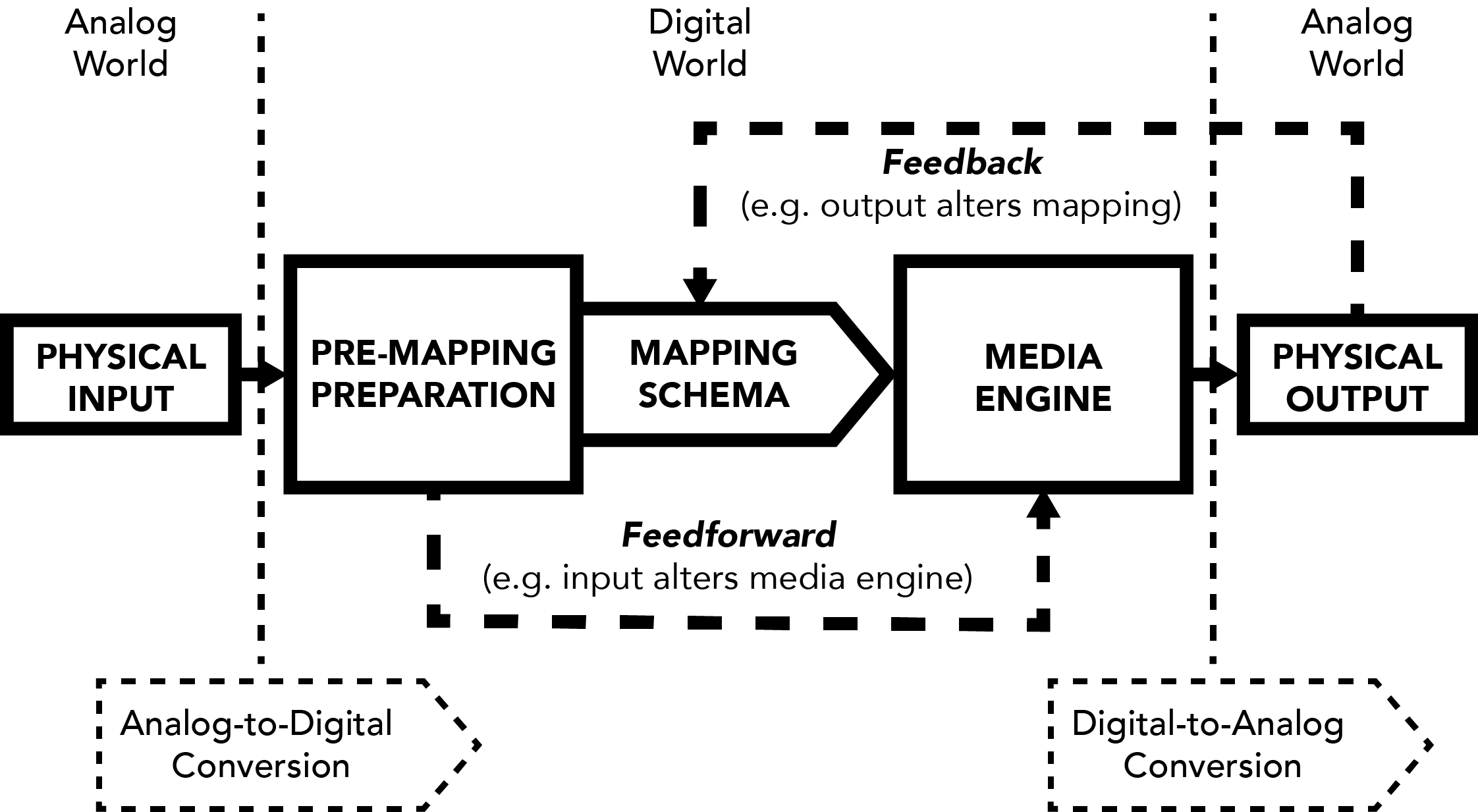

Unfixed Media Flow Chart (One View of Interactivity)

PHYSICAL INPUT

A device that picks up a signal from the real world, which may then be turned into digital data via Analog-to-Digital Conversion

Examples: Microphones, Video Cameras, Control Surfaces, Motion-Tracking Systems

PRE-MAPPING PREPARATION

A process of preparing raw, recently digitized physical input in order to map it

Examples: Scaling, Smoothing, Filtering, Event Detection, Segmentation, Feature Extraction
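As a rough illustration of three of these preparation steps (scaling, smoothing, and event detection), here is a minimal Python sketch. The function names, the 10-bit sensor range, the smoothing coefficient, and the threshold are all assumptions chosen for the example, not part of any particular tool used in the workshop.

```python
def scale(value, in_lo, in_hi, out_lo=0.0, out_hi=1.0):
    """Linearly rescale value from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))  # clamp out-of-range readings
    return out_lo + t * (out_hi - out_lo)


def smooth(values, alpha=0.5):
    """Exponential moving average; a smaller alpha smooths more heavily."""
    out = []
    state = None
    for v in values:
        state = v if state is None else alpha * v + (1 - alpha) * state
        out.append(state)
    return out


def detect_onsets(values, threshold):
    """Return the indices where the signal rises to or above the threshold."""
    onsets = []
    above = False
    for i, v in enumerate(values):
        if v >= threshold and not above:
            onsets.append(i)
        above = v >= threshold
    return onsets


# Hypothetical raw readings from a 10-bit sensor (0..1023).
raw = [0, 10, 512, 1023, 900, 30, 0, 800, 1023]
scaled = [scale(v, 0, 1023) for v in raw]
smoothed = smooth(scaled, alpha=0.5)
events = detect_onsets(smoothed, threshold=0.4)
```

Each stage feeds the next: the scaled values are what get smoothed, and the smoothed values are what the event detector sees, so changing `alpha` also changes when events fire.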

MAPPING SCHEMA

A designed/composed connection between the prepared physical input and the media engine, at micro (gesture), meso (phrase), and macro (formal) levels

Examples:

Simple: microphone input volume mapped to audio file playback volume; video camera movement mapped to different audio files

Complex: different live oboe melodic fragments mapped to a set of effect parameters and spatialization trajectories depending on the current section of the piece; the positions and squeezing pressure of a performer's ten fingers mapped to five in-series sound processing effects
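The difference between a simple and a more complex mapping can be sketched in Python. This is only an illustration under stated assumptions: the parameter names (`playback_gain`, `reverb_mix`, `delay_feedback`, `pan`), the section labels, and the table `SECTION_MAPPINGS` are hypothetical and do not correspond to a specific media engine.

```python
import math


def rms(samples):
    """Root-mean-square level of a block of samples in the range [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def simple_mapping(mic_block):
    """Simple mapping: microphone level drives playback volume (0..1)."""
    return {"playback_gain": min(1.0, rms(mic_block))}


# Hypothetical per-section table: the same input level drives different
# engine parameters depending on the current section of the piece.
SECTION_MAPPINGS = {
    "A": lambda level: {"reverb_mix": level, "pan": 0.5},
    "B": lambda level: {"delay_feedback": 0.9 * level, "pan": 1.0 - level},
}


def sectional_mapping(mic_block, section):
    """More complex mapping: the schema itself depends on the formal section."""
    level = min(1.0, rms(mic_block))
    return SECTION_MAPPINGS[section](level)
```

Calling `sectional_mapping(block, "A")` and `sectional_mapping(block, "B")` with the same input block yields different parameter sets, which is one way a mapping can operate at the macro (formal) level.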

MEDIA ENGINE

A live, responsive media generator/processor (in the digital and/or analog world)

Examples: A set of prepared audio files triggered live, a system of effects applied to live input, a set of synthesizer patches, a sound-producing circuit

PHYSICAL OUTPUT

A device that brings the output of the media engine into the real world via Digital-to-Analog Conversion

Examples: Loudspeakers, Transducers, Video Projectors, Lighting Systems, Motors

Interaction Discussion

Humans who perceive and alter the system (performers, users) are part of the interactive network

Uni-directional interaction is not interaction at all; interaction must at least be a static loop (e.g. execution-evaluation cycle)

The definition of the interaction (how elements in the system interact) may be static or dynamic

Dynamism may come from:

Feedforward (e.g. input altering parameters of the media engine: responsiveness to input)

Feedback (e.g. output altering the design of the mapping schema: responsiveness to output)
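One possible way to picture the two sources of dynamism is the following Python sketch. It assumes a single input level and a single output value, and the class `AdaptiveMapping` with its `sensitivity`, `target`, and `rate` parameters is a hypothetical construction for illustration only.

```python
class AdaptiveMapping:
    """A mapping whose sensitivity is reshaped by the system's own output."""

    def __init__(self, sensitivity=1.0, target=0.5, rate=0.1):
        self.sensitivity = sensitivity  # current input-to-output scaling
        self.target = target            # output level the system drifts toward
        self.rate = rate                # how quickly the mapping adapts

    def step(self, level):
        # Feedforward path: the input level sets an engine parameter.
        output = min(1.0, level * self.sensitivity)
        # Feedback path: the output alters the mapping used on the next step.
        self.sensitivity += self.rate * (self.target - output)
        return output


mapping = AdaptiveMapping()
outputs = [mapping.step(0.8) for _ in range(100)]
```

With a constant input, the first output follows the input directly, while later outputs settle near `target` because the mapping has rewritten itself in response to what it produced. A static mapping would return the same output on every step.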

Composing the transparency or perspicuity of interaction design/dynamism ("how does that work?") can be very compelling

The process of designing interaction may be:

Top-down (starting with a desired result and defining the input, mapping schema, and media engine accordingly)

Middle-out (combining/collaging previously-defined interactive systems into a new interaction)

Bottom-up (experimenting with inputs, mapping schemas, and sound/video to ultimately define an interaction)

Interaction design pitfalls:

Lack of robustness, repeatability (e.g. event triggers only when microphone is set ~just~ right)

Interaction not legible (too complex, e.g. an audience is unable to perceive causal relationships between input and output in the interaction)

Interaction is too one-to-one ("mickey-mousing", e.g. an audience becomes bored by a static, simple relationship)
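For the robustness pitfall, one common remedy is to trigger events with hysteresis (two thresholds instead of one), so that a level hovering near the threshold does not fire repeatedly or unpredictably. The Python sketch below is a minimal illustration; the function name and the threshold values are assumptions, not a prescribed setting.

```python
def hysteresis_triggers(levels, on_threshold=0.6, off_threshold=0.4):
    """Return indices where an event fires, using separate on/off thresholds.

    An event fires when the level reaches on_threshold, and cannot fire
    again until the level has dropped to off_threshold or below.
    """
    if off_threshold >= on_threshold:
        raise ValueError("off_threshold must be below on_threshold")
    triggers = []
    armed = True
    for i, level in enumerate(levels):
        if armed and level >= on_threshold:
            triggers.append(i)
            armed = False  # ignore further crossings until re-armed
        elif not armed and level <= off_threshold:
            armed = True
    return triggers


# A level that jitters around 0.6 before falling and rising again.
levels = [0.1, 0.62, 0.58, 0.61, 0.59, 0.3, 0.7]
fired = hysteresis_triggers(levels)
```

With a single threshold at 0.6, the jitter in this hypothetical input would produce an extra trigger at index 3; with hysteresis only the two clear gestures fire.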

Artists/Technologists/Friends Doing Interesting Things in This Field

Instrument Designers/Performers: Atau Tanaka, Laetitia Sonami, Fang Wan, Akiko Hatakeyama, Jon Bellona, Matthew Burtner, Nicholas Collins

Interactive Electronics + Acoustic Instruments: Pierre Boulez (Répons), Kaija Saariaho, Natasha Barrett, Eric Lyon, Christopher Biggs, Elainie Lillios

Media Designers/Dance: Golan Levin, Kyle McDonald, Mario Klingemann, Chunky Move, Recoil Performance Group

More Tools

Jitter Computer Vision (cv.jit) - Max tools for intelligent video processing/feature extraction

Zsa.descriptors - Max tools for real-time advanced sound analysis and description

Max for Live - embeds Max within Ableton Live (Digital Audio Workstation)

Wekinator - easy to use software for real-time, interactive machine learning (mapping)

Processing - programming language for making visuals and learning how to code (check out my introduction HERE)

SuperCollider - programming language for audio synthesis and algorithmic composition

Gibber - creative coding environment for audiovisual performance and composition (runs in browser)